Our long-running campaign A Quest of Queens is nearing 50 sessions after four years. This campaign is the very first one we ran in OSR+, and needless to say it has a lot of baggage: dozens of NPCs of varying importance, multiple BBEGs with evil agendas, several world-ending plots that are still unfolding, and a slew of ever-branching character arcs for the PCs that have exploded in complexity over the course of the campaign. While I record every session we play so I can watch the previous session before kicking off a new one, I face a challenge as we approach that 50th session: how do I locate all those unresolved adventure hooks so I can tie them up before the campaign’s conclusion? Re-watching 150 hours of footage is not an option. And my adventure notes, while meticulous, aren’t concerned with what happened so much as what can happen. The answer, in 2024, is nothing less than magical. And that magic is AI.

Hey! Don't groan. You may be AI'ed out since the buzz about it is everywhere. But you really shouldn't be.

I could go on and on about how insanely powerful the tools at our disposal are today, but it would take several more blog posts to convince you. Instead, I invite you to adopt a problem-solution mentality for now. We have a problem in front of us: the herculean task of cataloguing information that is plainly impossible for one Game Master to do before next month's session. But with a few free tools you can install on your very own computer right now, it's become a solvable task. And that's the real magic.

In this post, I'll show you how to go from dozens of three-hour recordings of RPG sessions to a fully queryable knowledgebase of the actual events of your campaign. And when I say "fully queryable," I mean you can talk to your campaign like you would ChatGPT: ask for summaries of sessions, what was at stake, or who did what. You can even pull literal quotes from the sessions and recall exactly what was said.

Let's get started.

Requirements, Process, & Tools

- I use Windows, and while it's possible to do everything below on a Mac or Linux, I can't help you if you use those operating systems.

- In order to run Whisper AI on your computer to perform the transcription, you'll need a dedicated Nvidia video card with at least 8 GB of VRAM.

- Finally, you have to be willing to follow instructions on GitHub and install things on your computer. ChatGPT will be your friend if you get esoteric errors!
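Not sure how much VRAM your card has? Here's a minimal sketch that asks nvidia-smi, the command-line utility installed alongside Nvidia's driver. The 8 GB threshold is the rule of thumb from the list above; the function names are my own, and the script only reports what the driver tells it.

```python
import subprocess

MIN_VRAM_GB = 8  # rule of thumb from the requirements list above


def parse_gpu_memory(csv_text):
    """Turn nvidia-smi's "name, memory.total" CSV rows (memory in MiB) into (name, MiB) pairs."""
    gpus = []
    for line in csv_text.strip().splitlines():
        name, memory = line.rsplit(",", 1)
        gpus.append((name.strip(), int(memory.strip())))
    return gpus


def has_enough_vram(total_mib, minimum_gb=MIN_VRAM_GB):
    # Round to the nearest GB, since a card sold as 8 GB may report slightly under 8192 MiB
    return round(total_mib / 1024) >= minimum_gb


def main():
    try:
        # Prints one CSV row per GPU: name and total memory in MiB, without headers or units
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader,nounits"],
            capture_output=True, text=True, check=True,
        )
    except (FileNotFoundError, subprocess.CalledProcessError):
        print("Couldn't run nvidia-smi. Is an Nvidia card (and its driver) installed?")
        return
    for name, total_mib in parse_gpu_memory(result.stdout):
        verdict = "looks OK" if has_enough_vram(total_mib) else "probably too little"
        print(f"{name}: {total_mib} MiB of VRAM ({verdict})")


if __name__ == "__main__":
    main()
```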

Overview of the Process

I currently have 44 recordings of our sessions. We record with full video, but this will still work if you just have audio files. Each of my sessions tends to run between three and four hours long. Here's the gist of what we're going to do:

- First, we transcribe each session into a text file with Whisper AI. This produces a text transcript of everything said in the video, where each person's dialogue is written out with timestamps and an identifier to associate each line of dialogue with a speaker.

- Next, we merge those transcriptions together into a single text file that combines the speakers, ordered by timecode. We use a custom Python script for this that I'll share.

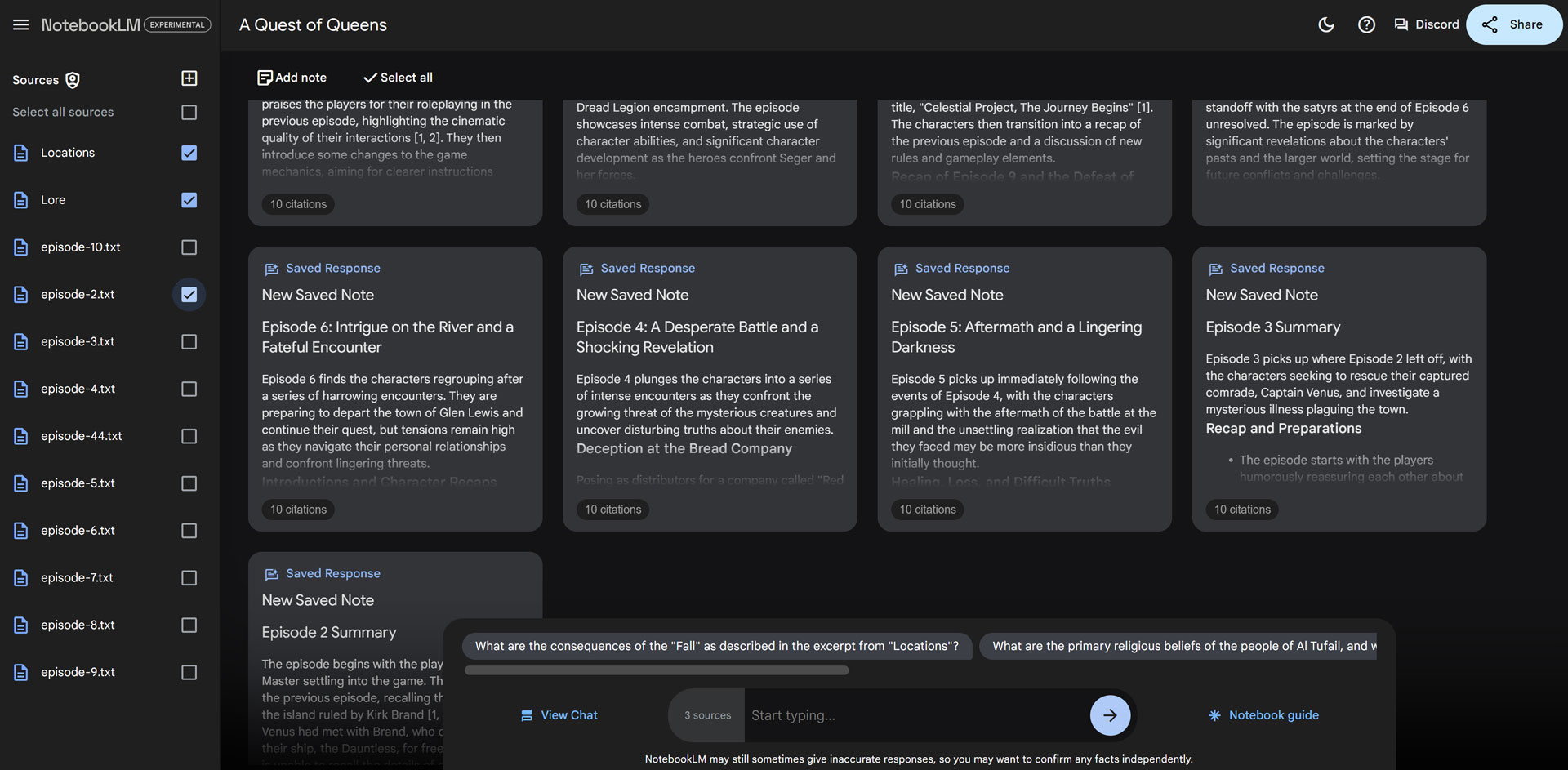

- Once we have the transcript, we feed it into a free Google service called NotebookLM, which uses Google's LLM, Gemini 1.5 Pro. NotebookLM allows up to 50 "documents" inside a single "notebook," and you can then ask the LLM questions about them. This notebook becomes our campaign's knowledgebase.

- Finally, we can ask Gemini questions about the campaign in the same way we might ask questions of ChatGPT. "Please write a summary of session 4" for example, or "Provide some quotes from Fodel Bersk when he was fighting Lord Paimon in session 44."

As you can see, you can choose what documents you want to prompt against when you chat with the LLM. So I can ask Gemini what happened in sessions 4 and 5 specifically. The more detailed my prompt, the more detailed the response. And I can also upload related documents to give the AI further context, such as background lore about the game world, or information about locations that the players encountered in the game.

I uploaded all of the lore posts from the Quest of Queens campaign setting on the website, as well as the text of every location, as two documents called "lore" and "locations." This way I can prompt about a specific session plus the lore and locations to give the AI more context.
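If your lore lives in lots of separate files (say, one text file per blog post), a short script can stitch them into single "lore" and "locations" documents like the ones described above. This is a minimal sketch, assuming hypothetical folders named lore and locations full of .txt or .md files; rename them to match your own setup.

```python
from pathlib import Path


def combine_documents(source_dir, output_file, patterns=("*.txt", "*.md")):
    """Concatenate every matching file in source_dir into one text file.

    Each file gets a header line with its name so the LLM (and you) can tell
    where one post ends and the next begins. Returns the number of files combined.
    """
    source = Path(source_dir)
    files = sorted(path for pattern in patterns for path in source.glob(pattern))
    sections = [f"# {path.stem}\n\n{path.read_text(encoding='utf-8').strip()}\n" for path in files]
    Path(output_file).write_text("\n".join(sections), encoding="utf-8")
    return len(files)


if __name__ == "__main__":
    # Hypothetical folder names: point these at wherever your lore and location files live
    for folder, output in [("lore", "lore.txt"), ("locations", "locations.txt")]:
        if Path(folder).is_dir():
            count = combine_documents(folder, output)
            print(f"Combined {count} files from {folder} into {output}")
        else:
            print(f"Skipping {folder} (folder not found)")
```

Upload the resulting lore.txt and locations.txt to NotebookLM alongside your session transcripts.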

The Tools You Need

The Audio/Video Input Format(s)

My recording process evolved over the years, so the format of the videos changed over time. In the very early days, we captured video straight from Discord via OBS, so our video files had a single mixed audio track of all the players, a second track that was my audio as the Game Master, and a third that was just background music.

Later on in the campaign, we used a bot called Craig in Discord to record each player's audio on a separate track. This produced FLAC files in addition to the video recording with the aforementioned tracks.

Today, we capture a single video file with separate tracks inside it for each player and the game master, because we conference via VDO Ninja and pipe the recording into OBS. VDO Ninja is a free peer-to-peer service that can capture streams remotely at high definition—it's pretty amazing and you should check it out.

All this to say: any of these audio or video formats can work. If you only have a single mp3 or mp4 of your recording that has everyone's voices on it, you'll need diarization to be part of your transcription process, which means the AI will have to identify and separate the speakers on the fly when it makes the text file. Whisper AI can do this, as can Adobe Premiere Pro. I will go over how this changes things up at the end of this post, but in the interim, I'm operating under the assumption that you're starting with separate tracks of audio for each player and the GM.
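If, like our current setup, your recording is one video file with a separate audio track per speaker, you'll likely want each track in its own audio file before handing it to Whisper, since it transcribes one audio stream per file. This post doesn't cover that step, so here's a minimal sketch assuming you have ffmpeg (not otherwise part of this workflow) installed and on your PATH. The file name session.mkv and the track order are hypothetical: check your real track order in OBS or with ffprobe first, and shift the indices if your file also contains a mixed track.

```python
import shutil
import subprocess
from pathlib import Path

# Hypothetical track order: audio track 0 is the GM, then each player.
# Check the real order in your OBS output settings (or with ffprobe) before running.
TRACKS = ["game-master", "barbara", "mister-loan", "nyn", "fodel"]


def build_extract_command(video_file, track_index, output_file):
    """Build an ffmpeg command that saves one audio track of a multi-track video as an MP3."""
    return [
        "ffmpeg", "-y",                      # overwrite existing output files without asking
        "-i", str(video_file),
        "-map", f"0:a:{track_index}",        # select the Nth audio stream of the first input
        "-vn",                               # no video in the output
        "-c:a", "libmp3lame", "-q:a", "2",   # encode as variable-bitrate MP3
        str(output_file),
    ]


def extract_all_tracks(video_file, speakers):
    if shutil.which("ffmpeg") is None:
        print("ffmpeg not found on PATH; install it first.")
        return
    if not Path(video_file).exists():
        print(f"{video_file} not found.")
        return
    for index, speaker in enumerate(speakers):
        output_file = f"{speaker}-track.mp3"
        subprocess.run(build_extract_command(video_file, index, output_file), check=True)
        print(f"Wrote {output_file}")


if __name__ == "__main__":
    extract_all_tracks("session.mkv", TRACKS)  # hypothetical file name
```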

Transcribing Audio with Whisper

Whisper is an "automatic speech recognition (ASR) system trained on 680,000 hours of multilingual and multitask supervised data collected from the web" by OpenAI. It's crazy accurate and open source, so you can use it on your own machine to perform transcriptions.

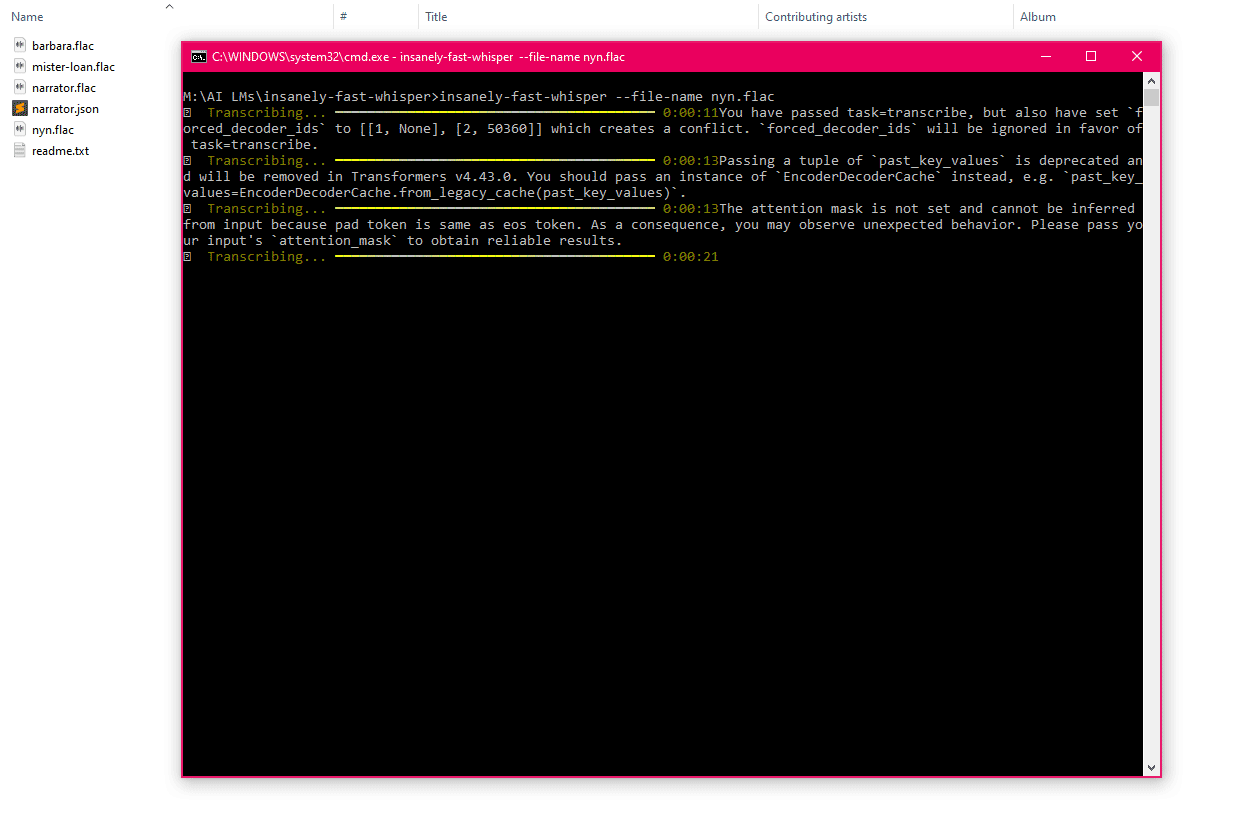

I use an implementation called Insanely Fast Whisper, written in Python. It enables you to use the Windows command prompt to target audio files on your computer and turn them into text transcriptions.

First, you'll need to install Python, which is the language the Insanely Fast Whisper implementation is written in. Then you can follow the steps on its GitHub page to install Whisper.

Once it's installed, open the Windows command prompt and navigate to the directory where your audio files are:

```
cd /path-to-my-files/
```

Then you can run the following command:

```
insanely-fast-whisper --file-name game-master-track.mp3
```

Whisper then transcribes the audio file into a JSON file called output.json, a structured text file containing all the dialogue in the audio file. Because each run writes to output.json again, rename it to match the track it represents (in this case, game-master.json). Do this for each of your tracks.
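Renaming output.json by hand gets tedious across 40-odd sessions. Insanely Fast Whisper's command line also accepts a --transcript-path option that sets the output file name, so a small loop can transcribe every track in a folder and name each JSON file after its track. This is a minimal sketch, assuming your tracks follow the hypothetical "speaker-track.mp3" naming used above and that insanely-fast-whisper is on your PATH; check the project's README for any extra options your GPU needs.

```python
import shutil
import subprocess
from pathlib import Path

TRACK_SUFFIX = "-track.mp3"  # hypothetical naming: game-master-track.mp3, barbara-track.mp3, ...


def transcript_name(audio_file):
    """Map e.g. 'game-master-track.mp3' to 'game-master.json' in the same folder."""
    path = Path(audio_file)
    name = path.name[: -len(TRACK_SUFFIX)] if path.name.endswith(TRACK_SUFFIX) else path.stem
    return path.with_name(f"{name}.json")


def build_whisper_command(audio_file):
    return [
        "insanely-fast-whisper",
        "--file-name", str(audio_file),
        "--transcript-path", str(transcript_name(audio_file)),  # instead of the default output.json
    ]


def transcribe_folder(folder="."):
    if shutil.which("insanely-fast-whisper") is None:
        print("insanely-fast-whisper not found on PATH.")
        return
    for audio_file in sorted(Path(folder).glob(f"*{TRACK_SUFFIX}")):
        print(f"Transcribing {audio_file.name} ...")
        subprocess.run(build_whisper_command(audio_file), check=True)


if __name__ == "__main__":
    transcribe_folder()
```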

Merging the Transcribed Tracks

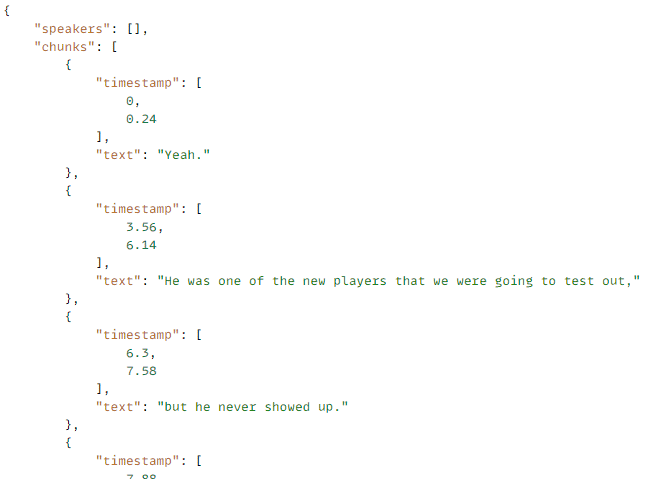

By now, you should have a JSON file for each player and one for the GM. (Let's assume you have four players, barbara.json, mister-loan.json, nyn.json, and fodel.json, plus game-master.json.) If you open any of these files in a text editor, you'll see that the dialogue is stored as a list of "chunks," each with a start and end timestamp (in seconds) and the text that was spoken.
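For reference, here's roughly the shape of that output written as a Python dictionary. The dialogue is invented, so treat it as an illustration rather than real Whisper output; what matters is the top-level "chunks" list, which is the part the merge script reads. The helper names are my own, and you can pass a real file to preview its first few lines.

```python
import json
import sys

# Illustrative only: roughly the structure insanely-fast-whisper writes to its JSON file.
SAMPLE_OUTPUT = {
    "speakers": [],
    "chunks": [
        {"timestamp": [0.0, 3.5], "text": " Okay, where did we leave off last time?"},
        {"timestamp": [3.5, 7.25], "text": " You were standing at the gates of the city."},
    ],
    "text": " Okay, where did we leave off last time? You were standing at the gates of the city.",
}


def load_transcript(path):
    with open(path, encoding="utf-8") as file:
        return json.load(file)


def preview_chunks(transcript, limit=5):
    """Return the first few chunks as 'start-end: text' strings for a quick sanity check."""
    lines = []
    for chunk in transcript.get("chunks", [])[:limit]:
        # Pad the [start, end] pair so a missing timestamp shows up as None
        start, end = (list(chunk.get("timestamp") or []) + [None, None])[:2]
        lines.append(f"{start}-{end}: {chunk.get('text', '').strip()}")
    return lines


if __name__ == "__main__":
    # e.g. python preview.py game-master.json; with no argument, preview the sample above
    transcript = load_transcript(sys.argv[1]) if len(sys.argv) > 1 else SAMPLE_OUTPUT
    for line in preview_chunks(transcript):
        print(line)
```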

In order for NotebookLM to make sense of a single session, we need to create a single text file that merges together each of these transcriptions in the proper timestamp order. This way NotebookLM can read all the dialogue in order, with each line of dialogue identified by the speaker's name.
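To make "merged in timestamp order" concrete, here's a toy example with two speakers and made-up lines. Because each Whisper file is already in chronological order, this sketch interleaves them with Python's heapq.merge. That's a swapped-in alternative to the collect-everything-then-sort approach in the merge script, and both produce the same order here.

```python
import heapq
from operator import itemgetter

# Made-up lines for two speakers; each list is already sorted by start time (seconds)
game_master = [
    {"start": 0.0, "speaker": "game-master", "text": "The door creaks open."},
    {"start": 9.0, "speaker": "game-master", "text": "Roll for initiative."},
]
barbara = [
    {"start": 4.5, "speaker": "barbara", "text": "I draw my sword."},
    {"start": 12.0, "speaker": "barbara", "text": "Seventeen!"},
]


def merge_by_start(*tracks):
    """Interleave already-sorted per-speaker tracks into one chronological list."""
    return list(heapq.merge(*tracks, key=itemgetter("start")))


if __name__ == "__main__":
    for line in merge_by_start(game_master, barbara):
        print(f"[{line['start']:>5}] {line['speaker']}: {line['text']}")
```

The result alternates between speakers exactly as the conversation happened, which is what lets NotebookLM follow who responded to whom.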

What we'll do is write a Python script that does this for us.

Because I'm a web developer, I originally wrote this script in PHP. Actually, ChatGPT wrote it, because I was too lazy to write it myself! Below is a Python version instead, which you can run from the Windows command prompt without setting anything else up, since you already installed Python for Whisper. (I asked ChatGPT to convert the PHP script to Python.)

```python
import json
import os
import sys


# Convert a floating-point timestamp (seconds) to HH:MM:SS:FF format
def convert_timestamp(float_time, frame_rate=30):
    if float_time is None:
        return "00:00:00:00"  # Default value if the timestamp is None

    hours = int(float_time // 3600)
    minutes = int((float_time % 3600) // 60)
    seconds = int(float_time % 60)
    frames = int((float_time - int(float_time)) * frame_rate)
    return f"{hours:02}:{minutes:02}:{seconds:02}:{frames:02}"


# Parse a JSON transcript file and return a list of entries with
# start time, end time, speaker, and text
def parse_json_transcript(file_path, speaker, frame_rate=30):
    try:
        with open(file_path, 'r', encoding='utf-8') as file:
            json_data = json.load(file)
    except FileNotFoundError:
        print(f"File not found: {file_path}")
        sys.exit(1)
    except json.JSONDecodeError:
        print(f"Failed to decode JSON from the file: {file_path}")
        sys.exit(1)

    entries = []
    for chunk in json_data.get('chunks', []):
        # Each chunk's timestamp is a [start, end] pair; pad it so a missing
        # or incomplete pair yields None instead of raising an IndexError
        timestamp = list(chunk.get('timestamp') or []) + [None, None]
        start_time, end_time = timestamp[0], timestamp[1]
        text = chunk.get('text', '').strip()

        # Skip entries without valid timestamps
        if start_time is None or end_time is None:
            print(f"Skipping entry in {file_path} due to missing timestamp.")
            continue

        entries.append({
            'startTime': start_time,
            'endTime': end_time,
            'formattedStartTime': convert_timestamp(start_time, frame_rate),
            'formattedEndTime': convert_timestamp(end_time, frame_rate),
            'speaker': speaker,
            'text': text,
        })

    return entries


# Merge multiple transcripts and order them by start time
def merge_transcripts(transcripts):
    merged = [entry for transcript in transcripts for entry in transcript]
    merged.sort(key=lambda x: x['startTime'])
    return merged


def main():
    # Ensure the script is run with a speaker list and an episode number
    if len(sys.argv) < 3:
        print("Usage: python transcript_merge.py speakers episode")
        sys.exit(1)

    # Retrieve command-line arguments
    speakers = [name.strip() for name in sys.argv[1].split(',')]
    episode = sys.argv[2]

    # The JSON files are expected in the current working directory
    base_dir = os.getcwd()

    # Parse the transcript for each speaker (file name = lowercase speaker name + .json)
    speaker_transcripts = []
    for speaker in speakers:
        file_path = os.path.join(base_dir, f"{speaker.lower()}.json")
        print(f"Reading: {file_path}")
        speaker_transcripts.append(parse_json_transcript(file_path, speaker))

    # Merge transcripts and sort them by start time
    merged_transcript = merge_transcripts(speaker_transcripts)

    # Write the merged transcript to a text file
    output_file = f"episode-{episode}.txt"
    with open(output_file, 'w', encoding='utf-8') as file:
        for entry in merged_transcript:
            line = f"[{entry['formattedStartTime']} - {entry['formattedEndTime']}] {entry['speaker']}: \"{entry['text']}\"\n"
            file.write(line)

    print(f"Merged transcript saved to {output_file}")


if __name__ == "__main__":
    main()
```

To use this script, save it as transcript_merge.py in the folder where your JSON files are, then navigate to that folder in the Windows command prompt and run the following command:

```
python transcript_merge.py "game-master,barbara,mister-loan,nyn,fodel" 5
```

The number 5 tells the script to name the resulting file episode-5.txt, and the comma-separated names match the JSON files for the GM and each party member (game-master.json, barbara.json, and so on). Each name also becomes that speaker's label in the merged transcript.

Congratulations! You now have a full transcript of session 5 that NotebookLM can understand!
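If you keep each session's JSON files in their own folder, you can run the merge for every session in one go. Because the merge script reads JSON files from the current working directory, this minimal sketch runs it once per folder with that folder as the working directory. It assumes hypothetical folder names like session-01 and session-02, with transcript_merge.py sitting in the parent folder. The speaker list is also an assumption: the merge script exits if a listed file is missing, so a session where someone was absent needs its own list.

```python
import re
import subprocess
import sys
from pathlib import Path

# Hypothetical speaker list; every session folder must contain one <name>.json per entry
SPEAKERS = "game-master,barbara,mister-loan,nyn,fodel"
MERGE_SCRIPT = Path("transcript_merge.py").resolve()


def session_number(folder_name):
    """Pull the session number out of a folder name like 'session-07' (None if it doesn't match)."""
    match = re.fullmatch(r"session-(\d+)", folder_name)
    return int(match.group(1)) if match else None


def merge_all_sessions(root="."):
    for folder in sorted(Path(root).iterdir()):
        number = session_number(folder.name) if folder.is_dir() else None
        if number is None:
            continue
        # Run the merge inside the session folder, since the script reads JSON files from the cwd
        subprocess.run([sys.executable, str(MERGE_SCRIPT), SPEAKERS, str(number)], cwd=folder, check=True)


if __name__ == "__main__":
    if MERGE_SCRIPT.exists():
        merge_all_sessions()
    else:
        print(f"Can't find {MERGE_SCRIPT}; run this from the folder that holds transcript_merge.py")
```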

Feed the AI Goblins

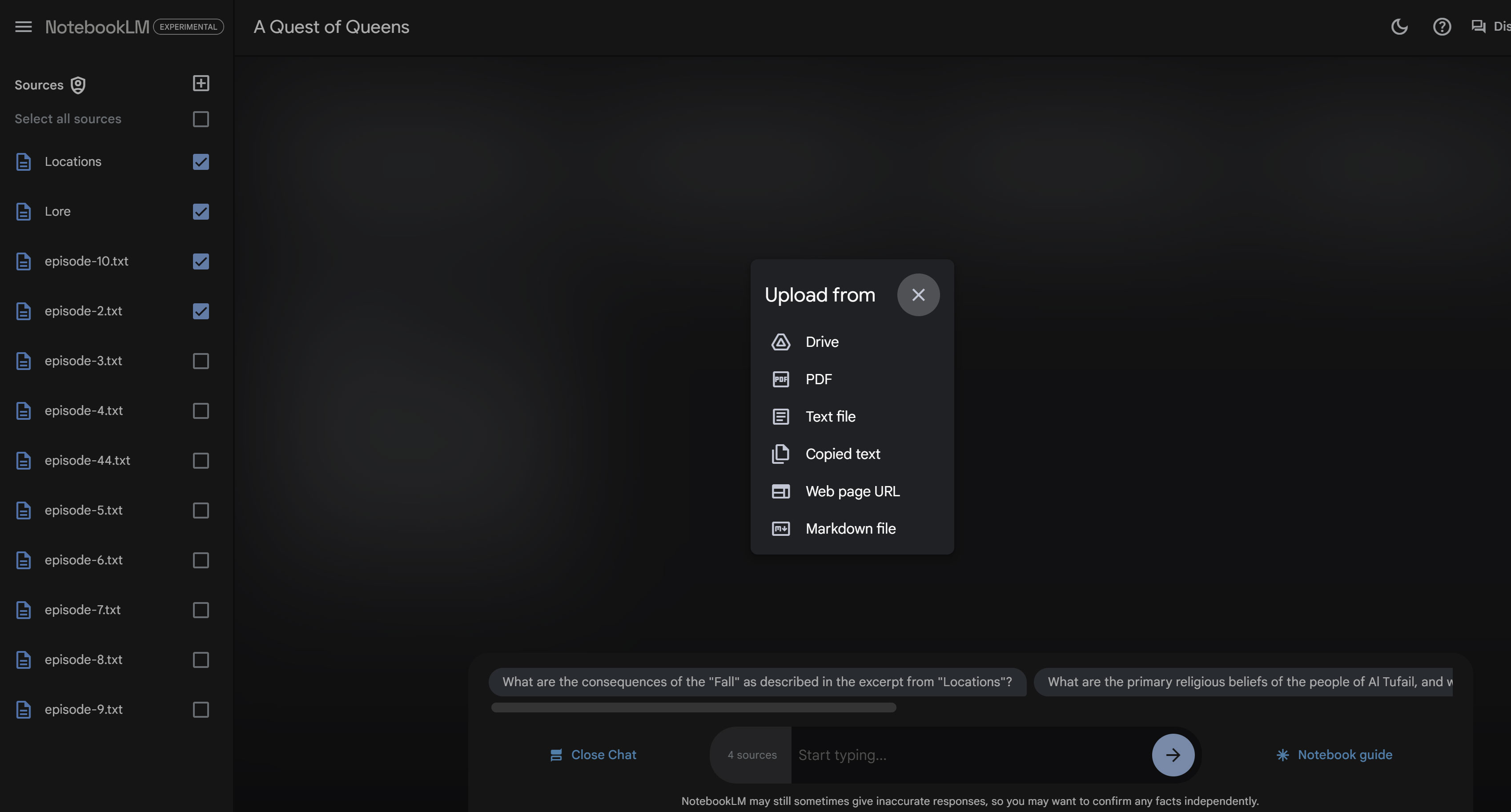

You can now go to NotebookLM and upload the session transcript.

Do this for all your sessions, and you'll be on your way to building your campaign's living knowledgebase!

Diarization & You

As I alluded to earlier, you may be in a situation where you don't have individual tracks for each speaker.

There are a couple of ways you can go about this:

With Whisper AI:

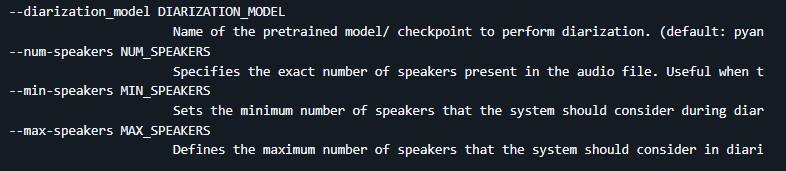

When you invoke Whisper AI from the Windows command prompt, you can give it additional parameters, as outlined on the GitHub page for Insanely Fast Whisper, that instruct the AI to automatically identify unique speakers. You have to specify how many speakers are in the audio.
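For reference, here's a sketch that builds such a command. According to the project's README at the time of writing (check it for your version), diarization is handled by pyannote, needs a Hugging Face access token passed with --hf-token, and --num-speakers sets the exact speaker count. The file names and token below are placeholders, so treat this as a starting point rather than a known-good recipe.

```python
def build_diarization_command(audio_file, num_speakers, hf_token, transcript_path="output.json"):
    """Build an insanely-fast-whisper command that also tries to label speakers."""
    if num_speakers < 1:
        raise ValueError("num_speakers must be at least 1")
    return [
        "insanely-fast-whisper",
        "--file-name", audio_file,
        "--hf-token", hf_token,                # Hugging Face token for the pyannote diarization model
        "--num-speakers", str(num_speakers),   # exact number of voices in the recording
        "--transcript-path", transcript_path,
    ]


if __name__ == "__main__":
    # Placeholders: a mixed recording of the GM plus four players, and your own token
    command = build_diarization_command("session-12-mixed.mp3", 5, "hf_your_token_here", "session-12.json")
    # Print the command so you can paste it into the command prompt
    print(" ".join(command))
```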

I personally could not get this to work on my older tracks, which led me to...

Using Adobe Premiere:

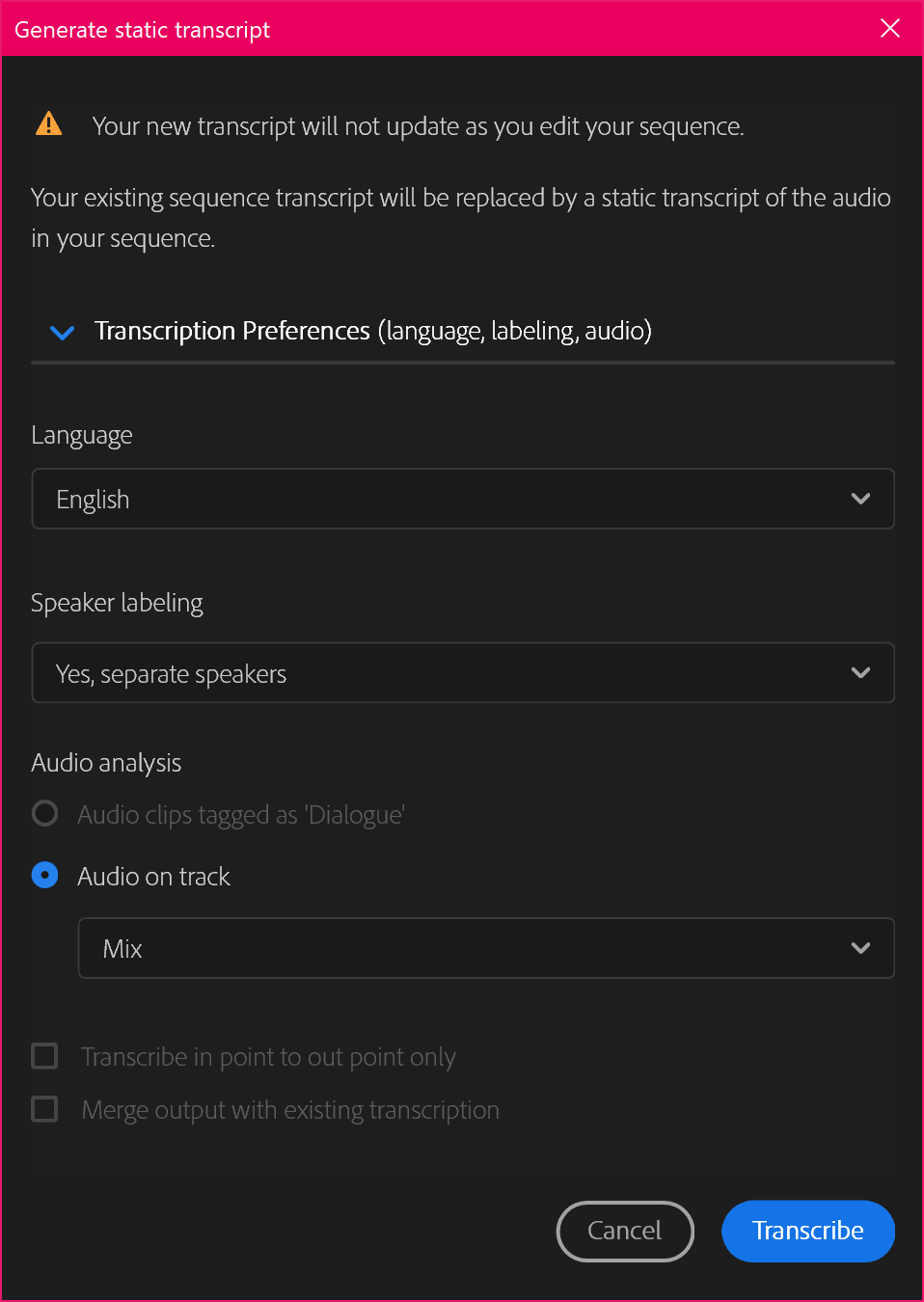

Premiere has diarization built into its automated transcription service. You can select the track that has all the speakers in it, then go to the Text window, click the Transcript tab, and under the three dots choose "Generate Static Transcript."

You want to choose "Yes, separate speakers" under the Speaker Labeling dropdown, and choose the track that has your audio with multiple speakers under Audio on Track. This will spit out a single text file, and Premiere will do its best to identify each speaker. They are blindly labeled "Speaker 1, Speaker 2, Speaker 3" and so on in the text file.
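Once you've worked out who "Speaker 1," "Speaker 2," and so on really are (skimming the first few minutes of the transcript usually does it), a find-and-replace can swap in real names. This is a minimal sketch, assuming the labels appear literally as "Speaker N" in Premiere's text export; the name mapping and file names are hypothetical. The regex matches whole numbers, so "Speaker 1" won't clobber "Speaker 10."

```python
import re
from pathlib import Path

# Hypothetical mapping from Premiere's blind labels to the people at the table
SPEAKER_NAMES = {
    1: "Game Master",
    2: "Barbara",
    3: "Mister Loan",
}

SPEAKER_LABEL = re.compile(r"\bSpeaker (\d+)\b")


def relabel_speakers(text, names):
    """Replace 'Speaker N' with names[N]; speaker numbers not in the mapping are left untouched."""
    def substitute(match):
        return names.get(int(match.group(1)), match.group(0))
    return SPEAKER_LABEL.sub(substitute, text)


def relabel_file(input_path, output_path, names):
    text = Path(input_path).read_text(encoding="utf-8")
    Path(output_path).write_text(relabel_speakers(text, names), encoding="utf-8")


if __name__ == "__main__":
    source = Path("session-03-premiere.txt")  # hypothetical Premiere export
    if source.exists():
        relabel_file(source, "session-03.txt", SPEAKER_NAMES)
    else:
        print(f"{source} not found; point this at your exported transcript")
```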

Through context clues, NotebookLM can actually make sense of the session even though the speakers aren't labeled by name, but you'll need to be more clever with your queries. For example, you can't ask "What did Barbara say to her captor at the end of the session?" because nobody is named Barbara in the transcript. Instead, you can ask "What did the speaker who was captured at the end of the session say to her captor?" and Gemini will likely be able to tell you.

Using the Knowledgebase

Now that you have your knowledgebase, what can you do with it? The Gemini chat is just like ChatGPT, although it's less creative. In my experience, Gemini under NotebookLM isn't any good at speculating. For example, if a name was transcribed incorrectly in a session but your lore document has the correct spelling, you won't get far asking Gemini to compare the two documents and make that connection. Gemini is only concerned with relaying what it finds in the documents in a literal sense. And while it can suss out themes and other overarching ideas from context, it's not good at deduction. That's fine, because the more specific your prompts, the better it will do at answering your questions.

Here's an example.

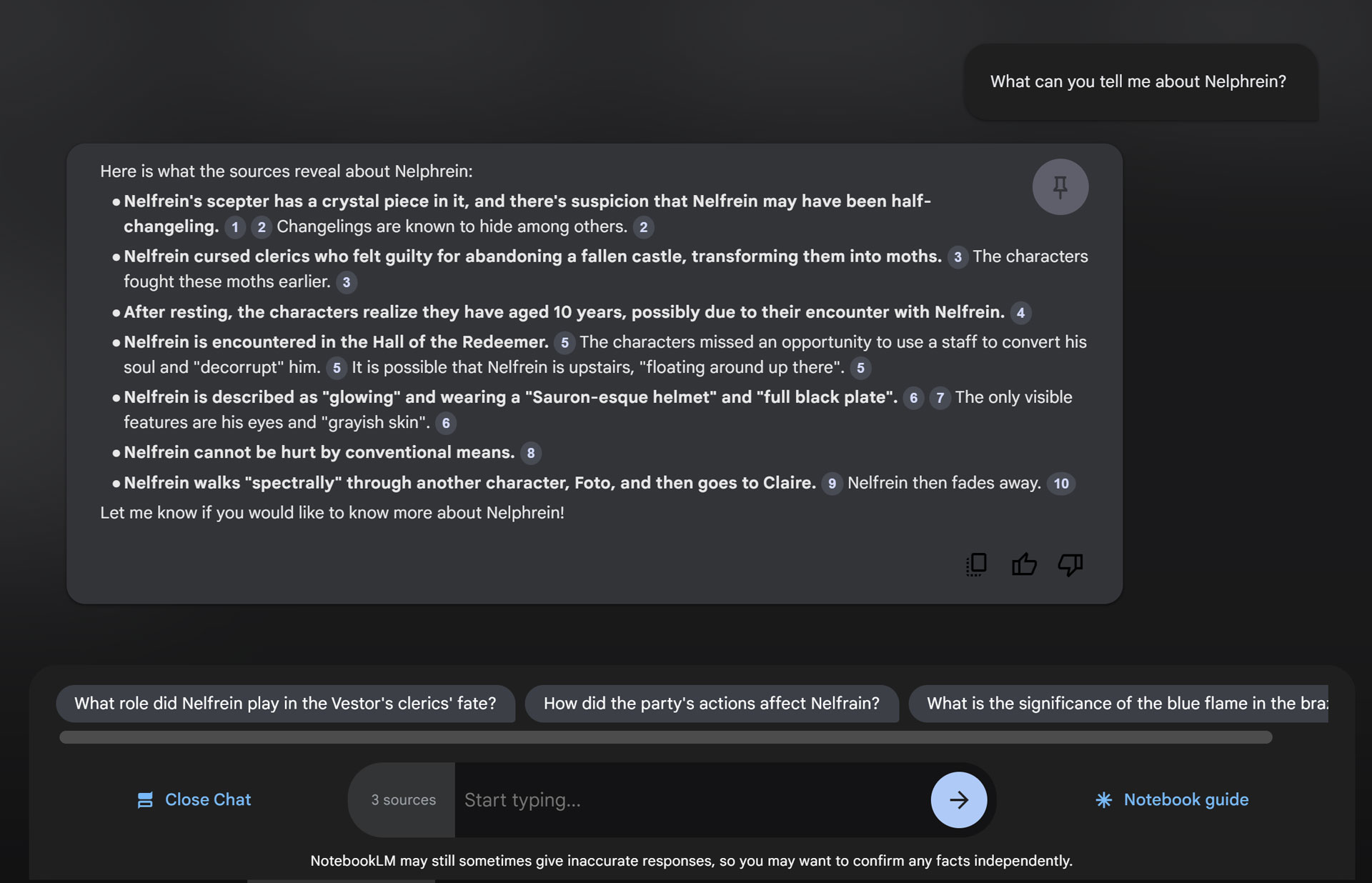

In one session, there is a monster called "Nelphrein" afoot, but the transcript never spells that name correctly, and the monster isn't mentioned by name when it's encountered later in the session. My prompt here is very broad ("What can you tell me about Nelphrein?"), and honestly I'm surprised it was able to glean what it did, considering that the transcript butchers that name.

Everything it says here is correct, except that it's confusing Nelphrein with another villain the party faced near the end called Lord Paimon (who wears a "Sauron-esque helmet" and "full black plate"—as I described him, verbatim).

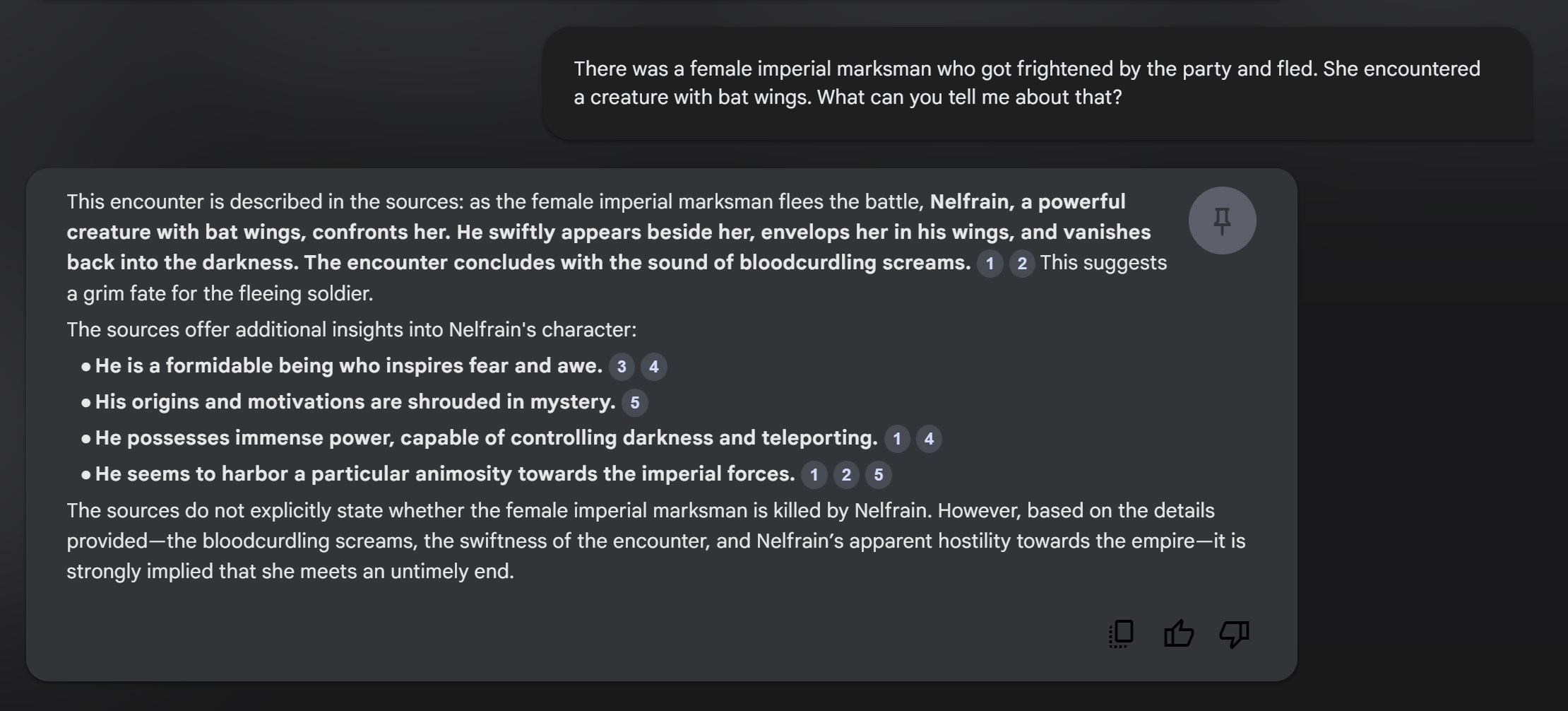

But let's see what I get when I'm more specific: "There was a female imperial marksman who got frightened by the party and fled. She encountered a creature with bat wings. What can you tell me about that?"

Here it zeroes in on the right part of the transcript and absolutely nails it.

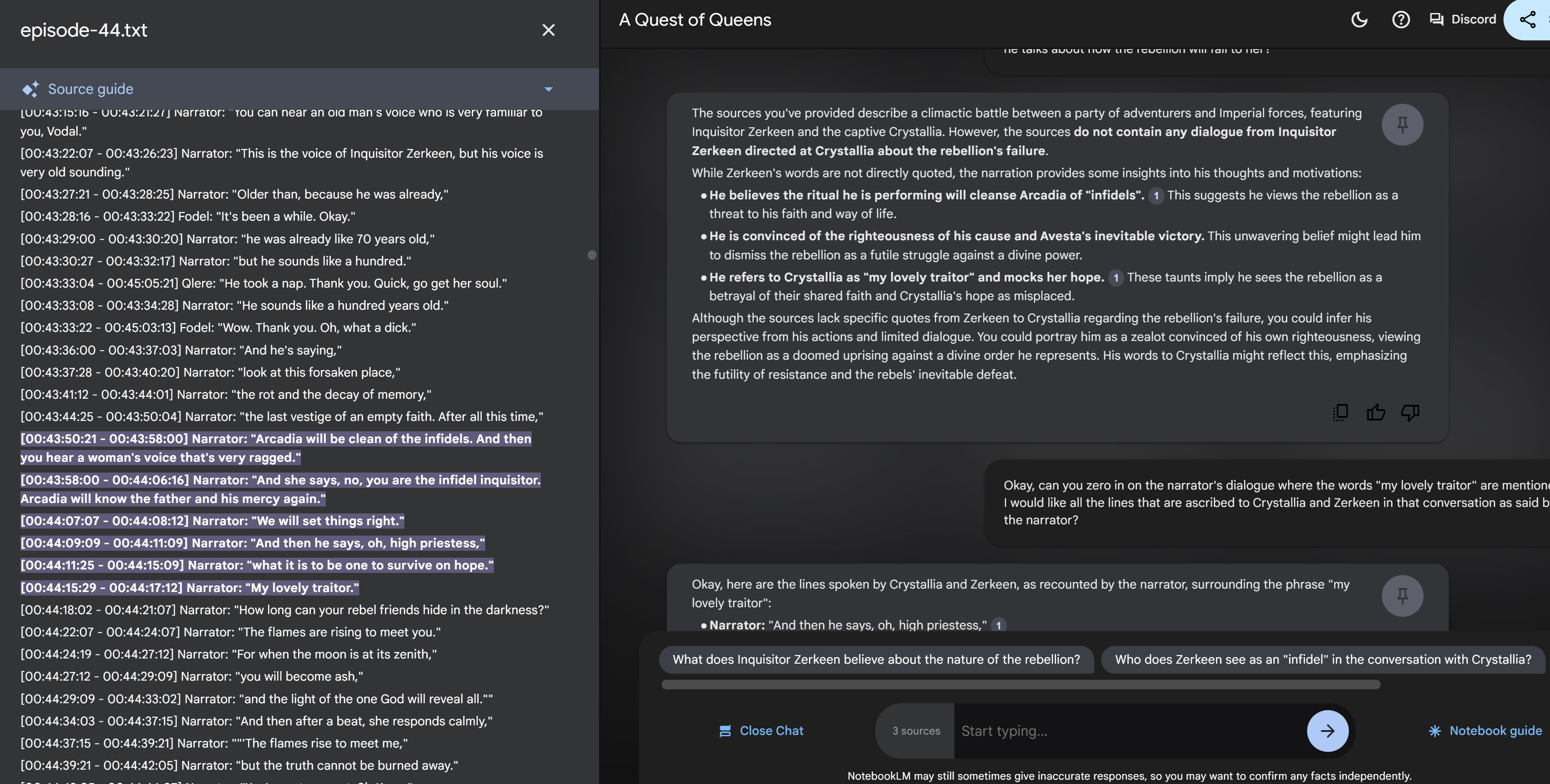

The last example I find especially powerful is getting quotes. I tend to "prime" Gemini by giving it a few words I remember, or some context about what was going on when I suspect a quote was uttered, so that it provides citations from the source material. Gemini is not creative in the context of NotebookLM, so if it can't find someone saying exactly what you're asking for, it will tell you it can't find such a thing in the transcript. So you have to poke and prod the AI to steer it in the right direction.

Voila! My prompting got me in the general proximity of the quotes I was looking for, and by clicking on Gemini's citations I can see exactly what was said at that moment.

And that's it for a taste of my Quest of Queens notebook!

It's an (im)perfect (but previously IMPOSSIBLE) tool for a GM to have at his disposal when reviewing a campaign. And I've barely scratched the surface of what you might be able to do with it, given clever enough prompts.

I hope you consider integrating these powerful tools into your prep! Maybe you'll end up with more time for real life at the end of the day.

(But who needs that anyway?)


Using Whisper and NotebookLM to Build a Private ChatGPT For Your Campaign